What visualization system designs are most effective for in situ processing? In situ processing creates several new challenges that will require fresh approaches for visualization systems. One challenge is increased pressure on shared resources: an in situ system's use of memory, compute power, energy, or the interconnect network can affect the simulation. Another challenge is integration. With post hoc processing, integration occurred via files, i.e., a simulation code wrote files and a visualization program read them. In some in situ settings, integration requires linking visualization algorithms into the simulation code, so each simulation code must be extended to invoke in situ APIs and exchange data. This approach can also lead to practical problems, such as complex compilation processes and large binary sizes. This page describes three research thrusts on visualization systems: PaViz, Ascent, and VisIt.
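
To make the integration point concrete, here is a minimal Python sketch of the pattern in which a simulation is extended to hand its data to visualization in memory each cycle instead of writing files. The `InSituAdapter` class and its `publish`/`execute` methods are hypothetical names chosen for illustration; they are not the API of Ascent, LibSim, or any other library, and the "visualization" is a stand-in reduction.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical types for the sketch: named fields of floats, and an action
# that receives the cycle number and the published fields.
Fields = Dict[str, List[float]]
Action = Callable[[int, Fields], Tuple[int, float]]


class InSituAdapter:
    """Hypothetical in situ interface that a simulation links against.

    Not the API of Ascent or LibSim; it only shows the pattern of
    publishing simulation data in memory and invoking visualization on it.
    """

    def __init__(self) -> None:
        self._actions: List[Action] = []
        self._cycle = -1
        self._fields: Fields = {}
        self.results: List[Tuple[int, float]] = []

    def add_action(self, action: Action) -> None:
        self._actions.append(action)

    def publish(self, cycle: int, fields: Fields) -> None:
        # Keep references to the simulation's arrays instead of writing
        # them to a file; the actions read the same in-memory lists.
        self._cycle = cycle
        self._fields = fields

    def execute(self) -> None:
        # Run every registered action on the most recently published data.
        for action in self._actions:
            self.results.append(action(self._cycle, self._fields))


def field_max(cycle: int, fields: Fields) -> Tuple[int, float]:
    # Stand-in for a visualization: reduce one field to a summary value.
    return (cycle, max(fields["temperature"]))


def run_simulation(steps: int, adapter: InSituAdapter) -> None:
    temperature = [0.0, 1.0, 2.0]
    for cycle in range(steps):
        temperature = [t + 1.0 for t in temperature]  # toy "physics" update
        # These two calls are the code the simulation had to be extended with.
        adapter.publish(cycle, {"temperature": temperature})
        adapter.execute()


adapter = InSituAdapter()
adapter.add_action(field_max)
run_simulation(3, adapter)
print(adapter.results)  # → [(0, 3.0), (1, 4.0), (2, 5.0)]
```

No file I/O is involved, but the visualization work now runs inside the simulation's time step and uses its memory and compute, which is the resource-pressure tradeoff described above.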

PaViz

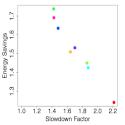

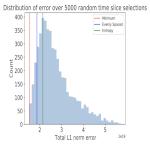
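
PaViz directs power using prediction rather than adaptation. The Python sketch below illustrates one simple predictive policy under loudly stated assumptions: each node's runtime is modeled as `work / power` (a hypothetical linear model; real processors do not scale this way), per-node work is assumed to be predicted before the work starts, and the goal is to equalize predicted finish times under a total power budget on an overprovisioned machine. The function names, budget, and cap are illustrative and are not taken from PaViz.

```python
from typing import List, Set

# Hypothetical performance model used throughout this sketch:
# runtime = work / power. Real hardware does not scale linearly with power.


def predictive_allocation(predicted_work: List[float], budget: float,
                          node_max: float) -> List[float]:
    """Split a power budget so predicted finish times are as equal as possible.

    Under runtime = work / power, equal runtimes need power proportional to
    predicted work. Nodes whose share would exceed node_max are pinned at
    node_max, and the leftover budget is re-split among the other nodes.
    Assumes every predicted_work entry is positive.
    """
    alloc = [0.0] * len(predicted_work)
    free: Set[int] = set(range(len(predicted_work)))
    remaining = budget
    while free:
        total_work = sum(predicted_work[i] for i in free)
        share = {i: remaining * predicted_work[i] / total_work for i in free}
        capped = {i for i in free if share[i] > node_max}
        if not capped:
            for i in free:
                alloc[i] = share[i]
            break
        for i in capped:
            alloc[i] = node_max
            remaining -= node_max
        free -= capped
    return alloc


def makespan(work: List[float], power: List[float]) -> float:
    # Under the assumed model, the slowest node determines completion time.
    return max(w / p for w, p in zip(work, power))


work = [6.0, 1.0, 1.0]           # hypothetical predicted work per node
budget, node_max = 240.0, 150.0  # hypothetical budget and per-node cap (watts)

uniform = [budget / len(work)] * len(work)
predicted = predictive_allocation(work, budget, node_max)
print(predicted)                  # → [150.0, 45.0, 45.0]
print(makespan(work, uniform))    # → 0.075
print(makespan(work, predicted))  # → 0.04
```

An adaptive scheme would instead start from a split like `uniform` and shift power only after measuring which nodes fall behind; the predictive version decides the split before any work runs.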

The PaViz system was a major part of Stephanie Labasan's dissertation research, which focused on power/performance tradeoffs for scientific visualization workloads on supercomputers. Her research had two distinct phases. In the first phase, she established that the data-intensive nature of visualization workloads can create favorable tradeoffs, e.g., accepting 10% slower performance in exchange for 40% energy savings. She did this by first studying one algorithm in depth and then performing a more comprehensive study on a wider class of algorithms. In the second phase, she considered overprovisioned supercomputers (where there are more compute resources than can be powered at full capacity at once) and designed approaches in the PaViz system that direct power to where it will improve overall performance the most. Her approach differed from previous work not only in that it considered visualization, but also in that it used prediction to direct power, as opposed to the standard practice of adaptation. Stephanie then performed a thorough study comparing prediction and adaptation for rendering workloads, finding that prediction works best.

Ascent

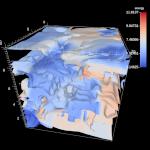

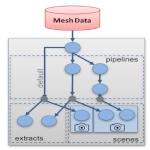

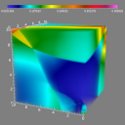
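
Ascent's triggers adapt when visualization is performed based on simulation properties. As a rough illustration of that idea (not Ascent's actual trigger interface), the Python sketch below runs a visualization action only when a hypothetical scalar `energy` has changed by more than 10% since the trigger last fired. The `Trigger` class, the `energy_jump` condition, the threshold, and the energy values are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

State = Dict[str, float]  # hypothetical per-cycle simulation summary
Condition = Callable[[State, State], bool]
Action = Callable[[int, State], None]


@dataclass
class Trigger:
    """Hypothetical trigger: run `action` only on cycles where `condition` holds."""
    condition: Condition
    action: Action
    last_fired_state: State = field(default_factory=dict)

    def evaluate(self, cycle: int, state: State) -> bool:
        # Compare the current state with the state at the last firing.
        if self.condition(self.last_fired_state, state):
            self.action(cycle, state)
            self.last_fired_state = dict(state)
            return True
        return False


def energy_jump(threshold: float) -> Condition:
    # Fire on the first evaluation, then whenever "energy" has changed by more
    # than `threshold` (as a fraction) since the trigger last fired.
    def cond(prev: State, cur: State) -> bool:
        if not prev:
            return True
        return abs(cur["energy"] - prev["energy"]) > threshold * abs(prev["energy"])
    return cond


rendered: List[int] = []
trigger = Trigger(energy_jump(0.10), lambda cycle, s: rendered.append(cycle))

energies = [100.0, 102.0, 105.0, 115.0, 116.0, 140.0]  # made-up values
for cycle, e in enumerate(energies):
    trigger.evaluate(cycle, {"energy": e})

print(rendered)  # → [0, 3, 5]
```

Visualization runs on three of six cycles here; the cycles where little changed are skipped, which is the kind of saving triggers aim for.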

The Ascent library has a special focus on "flyweight in situ," meaning a small API, small binary size, small execution overhead, and small memory footprint. CDUX alumnus Matt Larsen is the lead developer of Ascent; the project began during Matt's time as a Ph.D. student as Strawman, a "visualization mini-app." Ascent is a central part of the Department of Energy’s strategy for in situ visualization at the exascale and has been integrated with multiple simulation codes. Our students also often perform their research within Ascent and run with real simulation codes on DOE supercomputers. This includes the research on Lagrangian flow and wavelets and the research on estimating and optimizing in situ costs. Ascent also makes heavy use of VTK-m, incorporating CDUX results on portable performance. Finally, CDUX members have researched approaches for "triggers" within Ascent, which are mechanisms for adapting when visualization is performed based on simulation properties. Specifically, Matt Larsen, Nicole Marsaglia, and others proposed a system for in situ triggers that received the Best Paper award at ISAV18. More recently, Yuya Kawakami led an effort that used this system to begin benchmarking trigger efficacy.

VisIt

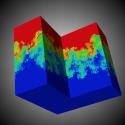

VisIt was originally developed by the Department of Energy (DOE) Accelerated Strategic Computing Initiative (ASCI) to visualize and analyze the results of terascale simulations. Hank Childs was a founding member of the VisIt development team, and he served as the project architect from 2000 to 2013. VisIt was designed with a high degree of modularity to support rapid deployment of new visualization technology. This includes a plugin architecture for custom readers, data operators, and plots, as well as the ability to support multiple user interfaces. Following a prototyping effort in the summer of 2000, an initial version of VisIt was developed and released in the fall of 2002. Since then, over 100 database readers, 60 operators, and 20 plots have been added to the open source code. In addition, commercial, government, and academic organizations in the US, Europe, and elsewhere have developed and maintained proprietary plugins and user interfaces for their own needs. Although VisIt was originally developed primarily to visualize ASCI terascale data, it has also proven well suited to visualizing smaller-scale data from simulations on desktop systems. With respect to in situ processing, Brad Whitlock used the VisIt source code to make "LibSim," an in situ version of VisIt. Finally, Hank's Ph.D. dissertation was built around the research he performed while helping to develop the tool, including a contract-based system that adapted which optimizations were applied based on the properties of a data flow network.
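
The following Python sketch illustrates the general idea of contract negotiation in a data flow network: before any data is read, a contract travels from the sink back toward the source, each filter amends it with its requirements, and the source can then decide what to load. The `Contract` fields (`domains`, `needs_ghost_zones`), the filter classes, and the domain layout are hypothetical simplifications, not VisIt's actual classes.

```python
from dataclasses import dataclass
from typing import List, Optional, Set, Tuple


@dataclass
class Contract:
    """Hypothetical contract: requirements gathered from the whole pipeline."""
    domains: Optional[Set[int]] = None  # None means "no restriction yet"
    needs_ghost_zones: bool = False


class Filter:
    def update_contract(self, contract: Contract) -> Contract:
        return contract  # default: add no requirements


class SliceFilter(Filter):
    """Slice at plane x = self.x; only domains spanning that x are needed."""

    def __init__(self, x: float, domain_x_ranges: List[Tuple[float, float]]) -> None:
        self.x = x
        self.ranges = domain_x_ranges

    def update_contract(self, contract: Contract) -> Contract:
        hit = {i for i, (lo, hi) in enumerate(self.ranges) if lo <= self.x <= hi}
        contract.domains = hit if contract.domains is None else contract.domains & hit
        return contract


class ContourFilter(Filter):
    def update_contract(self, contract: Contract) -> Contract:
        # Illustrative requirement: ask for ghost zones so results line up
        # across domain boundaries.
        contract.needs_ghost_zones = True
        return contract


def negotiate(pipeline: List[Filter], num_domains: int) -> Contract:
    # Walk from the sink back toward the source so every filter can amend
    # the contract before any data is read.
    contract = Contract()
    for f in reversed(pipeline):
        contract = f.update_contract(contract)
    if contract.domains is None:
        contract.domains = set(range(num_domains))
    return contract


# Hypothetical layout: four domains tiled along x.
ranges = [(0.0, 1.0), (1.0, 2.0), (2.0, 3.0), (3.0, 4.0)]
pipeline = [SliceFilter(2.5, ranges), ContourFilter()]  # source-to-sink order
c = negotiate(pipeline, num_domains=len(ranges))
print(sorted(c.domains), c.needs_ghost_zones)  # → [2] True
```

Here the slice lets the source skip three of four domains, an optimization chosen from the properties of the network rather than hard-coded into each filter.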